<hi #008000>Finished</hi>

Create your own Keypair using the web interface to be able to connect to the instances you will be creating.

The key can be created during the creation procedure of the first VM. I named my key “vic”. In this case, creating the key is fully automatic: all I need to do is follow the link it provides and save the private key on my machine. I need the private key later to connect (SSH) to my machine, with or without a password. For some VMs on Amazon EC2 a password is used in combination with the public/private key pair; for others, like the Debian distribution (Amazon now brands it under its own name, but it is actually running Debian), no password is needed.
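A minimal sketch of the connection step, assuming the key was saved as vic.pem and that the image accepts root logins (both are assumptions; other images may expect a different user name). ssh refuses a private key that other users can read, so the key is made readable by its owner only before connecting. The function names secure_key and connect are hypothetical.

```shell
#!/bin/sh
# Sketch: connect to an EC2 instance with the private key saved from the console.
# Assumptions (not from the original): the key file name and the login user.

# Make the key readable by its owner only; ssh rejects private keys
# that group or other users can read.
secure_key() {
    chmod 400 "$1"
}

# Open an SSH session: $1 = key file, $2 = public DNS name of the instance.
# The login user "root" is an assumption; some images expect another user.
connect() {
    ssh -i "$1" "root@$2"
}

# Usage:
#   secure_key vic.pem
#   connect vic.pem ec2-46-137-1-222.eu-west-1.compute.amazonaws.com
```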

Add two simple Fedora L.A.M.P. Web Starter instances (small, 1.7GB), use your previously created keypair for both

Please see the work log. I first created two LAMP instances from the standard images recommended by Amazon, but the LAMP service did not seem to be fully running on them. So I removed those instances and re-created them from a community image (AMI). Searching the community images, there is only one Fedora image running LAMP, and it works quite well.

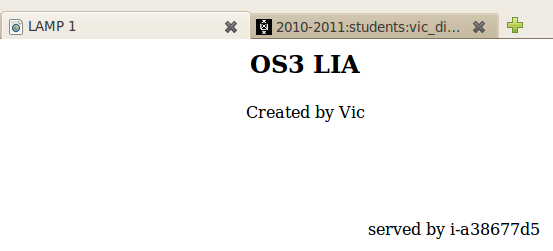

Use ssh to connect to both instances and change the web page to look like figure 1, where # is a unique single digit id number for each instance.

The “served by” tag makes it easy to see later which server is actually serving the page. I also put the machine name in the page title, so the title bar shows at a glance which server is responding to the request.
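As a sketch, the page could be written on each instance with a small shell function. The document root /var/www/html (Apache's default on Fedora), the file name index.html, the markup and the function name make_page are all assumptions; the layout of figure 1 is not reproduced, only the two elements described above (the machine name in the title and the “served by” line).

```shell
#!/bin/sh
# Sketch: write a test page that names the serving instance.
# Assumptions (hypothetical): document root, file name and markup.

# $1 = single-digit server id, $2 = document root (default /var/www/html)
make_page() {
    id=$1
    docroot=${2:-/var/www/html}
    cat > "$docroot/index.html" <<EOF
<html>
<head><title>LAMP$id</title></head>
<body>
<h1>Served by server $id</h1>
</body>
</html>
EOF
}

# Usage, on instance number 1 (as root):
#   make_page 1
```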

Test each webserver instance using the DNS reference provided by Amazon. Measure the response time for each instance

I used the ab command to test the servers. ab is ApacheBench, the Apache HTTP server benchmarking tool. It sends a user-defined number of requests, at a user-defined level of concurrency, to a specific web server.

I used the following parameters in the command:

vding@fx160-14:~/Downloads$ ab -n 50000 -c 500 http://ec2-46-137-1-222.eu-west-1.compute.amazonaws.com/index.php

- -n 20000 - total number of requests to send (I originally set this to 50000, as in the command above, but one of the target servers returned failures, so I lowered it to 20000)

- -c 500 - number of requests to send concurrently
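To pull the figures used below out of ab's full report, a small filter is enough. This is a sketch: it relies on ab printing both figures on lines that start with "Time per request:", with the value in the fourth whitespace-separated field; the function name summarize_ab is hypothetical.

```shell
#!/bin/sh
# Sketch: keep only the two "Time per request" figures from ab's report.
# Reads an ab report on stdin and prints "<mean> <across-all>" on one line.
summarize_ab() {
    awk '/^Time per request:/ { printf "%s%s", sep, $4; sep = " " }
         END { print "" }'
}

# Usage (same target as above):
#   ab -n 20000 -c 500 http://ec2-46-137-1-222.eu-west-1.compute.amazonaws.com/index.php | summarize_ab
```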

My results are as follows:

LAMP1 (server1)

    Time per request:       372.297 [ms] (mean)
    Time per request:       0.745 [ms] (mean, across all concurrent requests)
    Transfer rate:          729.21 [Kbytes/sec] received

    Connection Times (ms)
                  min  mean[+/-sd] median   max
    Connect:       21  187 796.5     26    9047
    Processing:    22  152 457.5     87    7390
    Waiting:       21  149 457.2     86    7389
    Total:         47  339 985.2    127   10413

LAMP3 (server2)

    Time per request:       340.807 [ms] (mean)
    Time per request:       0.682 [ms] (mean, across all concurrent requests)
    Transfer rate:          798.03 [Kbytes/sec] received

    Connection Times (ms)
                  min  mean[+/-sd] median   max
    Connect:       21  203 757.3     49    9113
    Processing:    21  109 137.2     88    1994
    Waiting:       21  108 137.2     86    1994
    Total:         43  312 797.1    142    9435

What matters most here is the response time, so these are the relevant figures extracted from the complete output above:

    # lamp1
    Time per request: 372.297 [ms] (mean)
    Time per request: 0.745 [ms] (mean, across all concurrent requests)
    # lamp3
    Time per request: 340.807 [ms] (mean)
    Time per request: 0.682 [ms] (mean, across all concurrent requests)
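The two figures for each server are not independent. ApacheBench reports the mean time per request as the concurrency level $c$ times the value "across all concurrent requests", so with -c 500 the numbers above satisfy:

$$T_{\text{mean}} = c \cdot T_{\text{across}}: \qquad 500 \times 0.745\ \text{ms} \approx 372.5\ \text{ms}\ (\text{lamp1}), \qquad 500 \times 0.682\ \text{ms} = 341.0\ \text{ms}\ (\text{lamp3})$$

This matches the reported 372.297 ms and 340.807 ms up to the rounding of the three-decimal values, so for a fixed concurrency either figure ranks the servers the same way.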

From these results, lamp1 seems to cope better with a large volume of concurrent requests, but it responds more slowly. It may be on a link with more capacity but several more hops away from us. lamp3 failed twice in 50000 requests, but its response time is slightly better than lamp1's.

Test the loadbalancing webserver using the (correct) DNS reference provided by Amazon.

I ran the same ab test on the public DNS name of my load balancer. It is working (responding to requests), but this test says nothing about how it behaves. The mean response time of the load balancer is:

    # result
    Time per request: 613.916 [ms] (mean)
    Time per request: 1.228 [ms] (mean, across all concurrent requests)

Which server is responding? And when?

Here I explain my approach; please see the work log for the test procedure. The goal is to find out which server in the load balancer responds to each request. The load balancer contains two servers, LAMP1 and LAMP3. I used a short script to send 10 requests (pages 1.html to 10.html) to the load balancer. The access log of LAMP1 shows that it served pages 1.html, 3.html, 5.html, 7.html and 9.html, and all the even-numbered pages were served by LAMP3 (server2). This means that the load balancer is distributing the load evenly. (The load balancer actually works in a round-robin way, but this test alone only shows that it distributes the load evenly. The round-robin behavior is demonstrated in the tests below.)

The code used in the test is shown here. It sends 10 requests and discards the responses. Since none of these pages exist, each request appears as a 404 entry in the access log of whichever server handled it, giving 10 entries in total.

    #!/bin/sh
    # Request 1.html to 10.html from the load balancer and discard the output.
    for i in $(seq 1 10); do
        wget -q -O /dev/null "http://vic-http-530589179.eu-west-1.elb.amazonaws.com/$i.html"
    done

Results from both access logs:

LAMP1: as expected, all the odd-numbered pages are served here.

    # /var/log/httpd
    10.234.91.224 - - [15/Feb/2011:10:29:29 -0500] "GET /1.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.234.91.224 - - [15/Feb/2011:10:29:29 -0500] "GET /3.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.234.91.224 - - [15/Feb/2011:10:29:29 -0500] "GET /5.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.234.91.224 - - [15/Feb/2011:10:29:29 -0500] "GET /7.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.234.91.224 - - [15/Feb/2011:10:29:30 -0500] "GET /9.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"

LAMP3: all the even-numbered pages are served here.

    # /var/log/httpd
    10.234.91.224 - - [15/Feb/2011:10:29:29 -0500] "GET /2.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.234.91.224 - - [15/Feb/2011:10:29:29 -0500] "GET /4.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.234.91.224 - - [15/Feb/2011:10:29:29 -0500] "GET /6.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.234.91.224 - - [15/Feb/2011:10:29:29 -0500] "GET /8.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.234.91.224 - - [15/Feb/2011:10:29:30 -0500] "GET /10.html HTTP/1.1" 404 317 "-" "Wget/1.12 (linux-gnu)"
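The same check can be done without reading the logs by eye. A sketch, assuming the Apache log format shown above (on Fedora the file is usually /var/log/httpd/access_log); served_pages is a hypothetical helper that prints the numbers of the test pages a server received, in log order.

```shell
#!/bin/sh
# Sketch: list which test pages (N.html) a server received, from its access log.
# Reads log lines on stdin and prints the page numbers on one line.
served_pages() {
    sed -n 's/.*"GET \/\([0-9][0-9]*\)\.html HTTP.*/\1/p' | paste -s -d ' ' -
}

# Usage, on each instance:
#   served_pages < /var/log/httpd/access_log
```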

Measure the response time.

This is the response time measured above; I paste the result again here:

    # result
    Time per request: 613.916 [ms] (mean)
    Time per request: 1.228 [ms] (mean, across all concurrent requests)

Now generate a continuous load (of work) on the most responsive of the instances. How does this influence the loadbalancer?

I used ab with a very large number of requests to flood LAMP3 (server2). The response time of LAMP3 became very long. However, this did not change the behavior of the load balancer: it still distributed the load evenly, even while one of the servers was extremely busy.

Measure the response time both on the loadbalancer as well as on the separate instances.

Please see the test result below

    # load balancer
    Time per request: 229.334 [ms] (mean)
    Time per request: 0.459 [ms] (mean, across all concurrent requests)
    # lamp1
    Time per request: 391.629 [ms] (mean)
    Time per request: 0.783 [ms] (mean, across all concurrent requests)
    # lamp3 (flooded)
    Time per request: 466.372 [ms] (mean)
    Time per request: 0.933 [ms] (mean, across all concurrent requests)

Draw a conclusion from the results.

The results clearly show that lamp3 is flooded: its mean time per request rose from 340.807 ms to 466.372 ms. Even so, the load balancer kept distributing the load evenly while LAMP3 was heavily loaded, and it responded faster than either single machine (229.334 ms, versus 391.629 ms for lamp1 and 466.372 ms for lamp3). This shows that the load balancer really works as its name suggests: by distributing the load, it can respond faster than a single machine.

Set up a Windows server. What are the advantages/disadvantages of a mixed setup?

The advantages are:

- no lock-in - Since there is more than one kind of server, we do not depend on a specific solution or vendor.

- no single point of failure - If one solution has a problem and is down or has to be taken down, we still have the others. For example, if a serious bug is found in Windows Server and we have to shut it down, the Linux servers can keep running smoothly.

- customer friendly - By offering customers more options, we are more customer friendly. We do not require customers to have specific knowledge of our service; they can choose the solution they are most comfortable working with.

The disadvantages are:

- more solutions, more problems - A problem found in any one of the solutions can affect us.

- management burden - More time, effort and cost are required for management.

- incompatibility among vendors - Not all solutions will necessarily work together.

Re-test the loadbalancing webserver using the (correct) DNS reference provided by Amazon.

I added the Windows server to the load balancer and ran the test code above again, this time requesting 12 pages (1.html to 12.html), as the logs below show.

Which server is responding? And when?

As the logs below show, we can now conclude that the load balancer really works in a round-robin fashion: LAMP1, then LAMP3, then the Windows server, and then the loop starts again. Each server serves in turn.

    # lamp1
    10.224.71.85 - - [15/Feb/2011:13:13:21 -0500] "GET /1.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.224.71.85 - - [15/Feb/2011:13:13:21 -0500] "GET /4.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.224.71.85 - - [15/Feb/2011:13:13:21 -0500] "GET /7.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.224.71.85 - - [15/Feb/2011:13:13:21 -0500] "GET /10.html HTTP/1.1" 404 317 "-" "Wget/1.12 (linux-gnu)"
    # lamp3
    10.224.71.85 - - [15/Feb/2011:13:13:21 -0500] "GET /2.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.224.71.85 - - [15/Feb/2011:13:13:21 -0500] "GET /5.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.224.71.85 - - [15/Feb/2011:13:13:21 -0500] "GET /8.html HTTP/1.1" 404 316 "-" "Wget/1.12 (linux-gnu)"
    10.224.71.85 - - [15/Feb/2011:13:13:21 -0500] "GET /11.html HTTP/1.1" 404 317 "-" "Wget/1.12 (linux-gnu)"
    # win2k8 IIS7
    2011-02-15 18:13:21 10.234.23.86 GET /3.html - 80 - 10.224.71.85 Wget/1.12+(linux-gnu) 404 0 2 0
    2011-02-15 18:13:21 10.234.23.86 GET /6.html - 80 - 10.224.71.85 Wget/1.12+(linux-gnu) 404 0 2 0
    2011-02-15 18:13:21 10.234.23.86 GET /9.html - 80 - 10.224.71.85 Wget/1.12+(linux-gnu) 404 0 2 0
    2011-02-15 18:13:21 10.234.23.86 GET /12.html - 80 - 10.224.71.85 Wget/1.12+(linux-gnu) 404 0 2 0
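The order seen in these logs is what a strict round-robin rotation predicts: with n servers, request i goes to server ((i - 1) mod n) + 1. The sketch below models that rotation with a hypothetical function round_robin; it only reproduces the observed behavior and says nothing about how Elastic Load Balancing is implemented internally.

```shell
#!/bin/sh
# Sketch: which server a strict round-robin balancer picks for request N.
# $1 = request number (1-based); remaining args = servers in rotation order.
round_robin() {
    req=$1
    shift
    # Skip (req - 1) mod n servers; the next one in line serves this request.
    shift $(( (req - 1) % $# ))
    echo "$1"
}

# Usage: expected assignment for the 12 test requests.
#   for i in $(seq 1 12); do echo "$i.html -> $(round_robin "$i" LAMP1 LAMP3 WIN2K8)"; done
```

For example, round_robin 7 LAMP1 LAMP3 WIN2K8 prints LAMP1, matching the log above.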

Measure the response time.

The response time of the load balancer:

    vding@fx160-14:~/Downloads$ ab -n 30000 -c 500 http://vic-http-530589179.eu-west-1.elb.amazonaws.com/
    ...
    Server Software:        Apache/2.2.15
    Server Hostname:        vic-http-530589179.eu-west-1.elb.amazonaws.com
    Server Port:            80
    ...
    Time per request:       190.050 [ms] (mean)
    Time per request:       0.380 [ms] (mean, across all concurrent requests)
    ...

And the response time of the Windows server:

    vding@fx160-14:~/Downloads$ ab -n 30000 -c 500 http://ec2-79-125-50-217.eu-west-1.compute.amazonaws.com/
    ...
    Server Software:        Microsoft-IIS/7.0
    Server Hostname:        ec2-79-125-50-217.eu-west-1.compute.amazonaws.com
    Server Port:            80
    ...
    Time per request:       397.411 [ms] (mean)
    Time per request:       0.795 [ms] (mean, across all concurrent requests)
    ...

Comparing the load balancer's response in the 2-server setup (613.916 ms mean time per request) with the 3-server setup here (190.050 ms), we can see that in these runs the performance (response time) improves as more servers are added to the load balancer.

How much money did it cost? Specify this in detail related to the delivered services

Instead of relying on the usage report, I went to the EC2 pricing page and calculated the cost of my experiment manually.

I used the EU region, so the applicable prices are:

| Standard On-Demand Instances | Linux/UNIX Usage | Windows Usage |
|---|---|---|
| Small (Default) | $0.095 per hour | $0.12 per hour |

| Standard Reserved Instances | 1 yr Term | 3 yr Term | Linux/UNIX Usage | Windows Usage |
|---|---|---|---|---|
| Small (Default) | $227.50 | $350 | $0.04 per hour | $0.06 per hour |

Internet Data Transfer

The pricing below is based on data transferred "in" and "out" of Amazon EC2.

| Data Transfer In | US & EU Regions | APAC Region |
|---|---|---|
| All Data Transfer | $0.10 per GB | $0.10 per GB |

| Data Transfer Out | US & EU Regions | APAC Region |
|---|---|---|
| First 1 GB per Month | $0.00 per GB | $0.00 per GB |
| Up to 10 TB per Month | $0.15 per GB | $0.19 per GB |

Availability Zone Data Transfer

* $0.00 per GB – all data transferred between instances in the same Availability Zone using private IP addresses.

Regional Data Transfer

* $0.01 per GB in/out – all data transferred between instances in different Availability Zones in the same region.

Public and Elastic IP and Elastic Load Balancing Data Transfer

* $0.01 per GB in/out – If you choose to communicate using your Public or Elastic IP address or Elastic Load Balancer inside of the Amazon EC2 network, you’ll pay Regional Data Transfer rates even if the instances are in the same Availability Zone. For data transfer within the same Availability Zone, you can easily avoid this charge (and get better network performance) by using your private IP whenever possible.

The simple formula I used is:

$$C = c_1 h_1 + c_2 h_2 + \sum c_3 M_1 + \sum c_4 M_2$$

* $C$ is the total cost.
* $c_1$ is the hourly rate of an on-demand Linux instance and $h_1$ is its running time (instance-hours).
* $c_2$ is the hourly rate of an on-demand Windows instance and $h_2$ is its running time.
* $\sum c_3 M_1$ is the sum of the Internet data transfer rates times the corresponding amounts of data.
* $\sum c_4 M_2$ is the sum of the Availability Zone, regional and public data transfer rates times the corresponding amounts of data. I do not need to compute this term, as I did not transfer any data between the instances.

With the values:

* $c_1 = 0.095$ and $h_1 \approx 2 \times 8 = 16$ hours (two Linux servers, about 8 hours each).
* $c_2 = 0.12$ and $h_2 \approx 3$ hours.
* $c_3 = 0.10$ per GB for transfer in, with $M_1 = 3 \times 363$ B (my 363-byte HTML page, uploaded three times). Transfer out costs nothing, since the first GB per month is free.

So:

$$C \approx 0.095 \times 16 + 0.12 \times 3 + 0.10 \times (3 \times 363 \times 10^{-9}) \approx 1.52 + 0.36 + 0.0000001 \approx 1.88 \text{ USD}$$

The actual cost is likely higher than this, since the data transferred by my commands and by the benchmark requests is not included.
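The arithmetic can be checked with a short awk script. This sketch hard-codes the EU on-demand rates from the price list and the approximate hours from the text; the function name experiment_cost is hypothetical, and 1 GB is taken as 10^9 bytes.

```shell
#!/bin/sh
# Sketch: experiment cost C = c1*h1 + c2*h2 + c3*M1 (USD); the c4*M2 term is
# omitted because no data was transferred between the instances.
experiment_cost() {
    awk 'BEGIN {
        c1 = 0.095; h1 = 2 * 8           # two Linux servers, ~8 hours each
        c2 = 0.12;  h2 = 3               # one Windows server, ~3 hours
        c3 = 0.10;  m1 = 3 * 363 / 1e9   # 363-byte page uploaded 3 times, in GB
        printf "%.2f\n", c1 * h1 + c2 * h2 + c3 * m1
    }'
}

# Usage:
#   experiment_cost
```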

Give an estimate of the costs if the services are used for a year

I adapted the formula above and reused it. The yearly cost can be estimated as follows:

$$C = c_1 + \sum c_3 M_1$$

* $c_1$ is the constant yearly cost of the reserved instances plus their usage (hours times hourly rate).
* $\sum c_3 M_1$ is the Internet data usage. Let's assume 5 GB per month on average, both in and out.

That is, C = reservation fees for the three servers + usage of the 2 Linux servers + usage of the 1 Windows server + data transfer in and out:

$$\begin{aligned}
C &= 227.5 \times 3 + 365 \times 24 \times (2 \times 0.04 + 0.06) + 5 \times 0.10 \times 12 + 4 \times 0.15 \times 12 \\
  &= 682.5 + 1226.4 + 13.2 = 1922.1 \text{ USD}
\end{aligned}$$

Here $5 \times 0.10 \times 12$ is the transfer in (5 GB per month) and $4 \times 0.15 \times 12$ is the transfer out (5 GB per month, of which the first GB is free).
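The yearly figure can be checked the same way. This sketch uses the same assumptions as the text (three reserved Small instances on a 1-year term, two Linux and one Windows server running all year, 5 GB per month in and out); yearly_cost is a hypothetical name.

```shell
#!/bin/sh
# Sketch: yearly estimate in USD, using the assumptions stated in the text.
yearly_cost() {
    awk 'BEGIN {
        reserve  = 227.5 * 3                      # 1-year reservation fees, 3 servers
        usage    = 365 * 24 * (2 * 0.04 + 0.06)   # 2 Linux + 1 Windows, all year
        transfer = 5 * 0.10 * 12 + 4 * 0.15 * 12  # in, plus out with first GB free
        printf "%.1f\n", reserve + usage + transfer
    }'
}

# Usage:
#   yearly_cost
```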

Running servers in the cloud costs less than running them on your own machines with the same SLA. But it is definitely NOT an almost-free service, as the small experiment in the previous section might suggest. Costs should be calculated carefully before deploying machines to the cloud.

(Bonus) Explain, for the three clouds, how you did it; or why it failed.

I haven't migrated HVMs to the three clouds mentioned in the assignment, but I think it is feasible for all three of them. I am doing a project on OpenStack for LIA. Both the OpenStack and the Eucalyptus documentation at least claim that images can be ported between the two. Hence, even if a certain cloud does not work with Xen, we could use OpenStack or Eucalyptus as an intermediate step to convert the images and make the clouds compatible so they work together.